I’m not sure I understand. I’ve already been running ffmpeg from the command line, and it’s been using multiple cores by default. What’s the difference? What’s the new behavior?

Maybe this?

Every instance of every such component was already running in a separate thread, but now they can actually run in parallel.

Good old RTFM lol

Before, you could tell an encoder to run multiple threads, but everything outside of the encoder would effectively run single-threaded.

Now you should be able to have all of FFmpeg’s components (decoders, encoders, filters, audio, video, everything) run in parallel.
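To make the “every component in its own thread” idea concrete, here’s a toy sketch in Python. It is not FFmpeg’s actual code (FFmpeg is written in C, and its scheduler is more involved). The stage names and string “frames” are made up for illustration. Each stage runs in its own thread and hands work downstream through a bounded queue, so the decoder can already be working on packet N+1 while the encoder is still busy with packet N. One caveat: in CPython the GIL keeps pure-Python stages from truly running on separate cores, so this only shows the structure, not the speedup.

```python
import queue
import threading

SENTINEL = None  # marks end of stream


def stage(name, func, inbox, outbox):
    """Run one pipeline component in its own thread: pull, transform, push."""
    def run():
        while True:
            item = inbox.get()
            if item is SENTINEL:
                outbox.put(SENTINEL)  # propagate end-of-stream downstream
                break
            outbox.put(func(item))

    t = threading.Thread(target=run, name=name)
    t.start()
    return t


def transcode(packets):
    # Hypothetical stand-ins for the decode/filter/encode stages.
    steps = [
        ("decode", lambda p: f"frame({p})"),
        ("filter", lambda f: f"scaled({f})"),
        ("encode", lambda f: f"packet({f})"),
    ]
    # Bounded queues between stages apply backpressure to fast producers.
    queues = [queue.Queue(maxsize=8) for _ in range(len(steps) + 1)]
    threads = [
        stage(name, fn, queues[i], queues[i + 1])
        for i, (name, fn) in enumerate(steps)
    ]

    # "Demuxer": feeds input packets into the first queue from its own thread.
    def demux():
        for p in packets:
            queues[0].put(p)
        queues[0].put(SENTINEL)

    demuxer = threading.Thread(target=demux, name="demux")
    demuxer.start()
    threads.append(demuxer)

    # "Muxer": the calling thread drains the last queue.
    out = []
    while True:
        item = queues[-1].get()
        if item is SENTINEL:
            break
        out.append(item)

    for t in threads:
        t.join()
    return out
```

Calling `transcode(["a", "b"])` returns `["packet(scaled(frame(a)))", "packet(scaled(frame(b)))"]`. Output stays in input order because each stage is a single thread reading from a FIFO queue.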

Oooo. Will it be automatic? Or do you need to pass a flag?

I was using GNU Parallel with ffmpeg before. Is this any different, or better?

GNU Parallel works by running multiple processes, which tends to be less efficient than multi-threading. I can’t speak to the specifics of your use vs. FFmpeg’s refactoring, though.

This is the best summary I could come up with:

The long-in-development work for a fully functional multi-threaded FFmpeg command line has been merged!

FFmpeg is widely used throughout many industries for video transcoding, and in today’s many-core world this is a terrific improvement for this key open-source project.

The patches include adding the thread-aware transcode scheduling infrastructure, moving encoding to a separate thread, and various other low-level changes.

The main loop and every component (demuxers, decoders, filters, encoders, muxers) were also changed to use the previously added transcode scheduler.

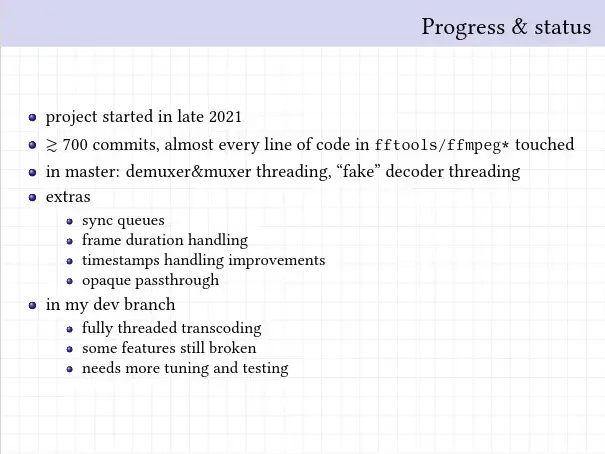

There’s a recent presentation on this work by developer Anton Khirnov.

It’s terrific to see this merged, and it will be interesting to see the performance impact in practice.

The original article contains 226 words, the summary contains 103 words. Saved 54%. I’m a bot and I’m open source!