This article (haven’t watched the video yet) makes me feel ancient.

Like, it’s clearly written by someone who didn’t live through the CRT era. It seems to be repeating things they heard but didn’t understand and, frustratingly, that includes not mentioning the monitor’s actual horizontal scan rating in kHz, which is really the only thing that matters.

I’m old and my knees hurt. That’s the only point I’m making here.

I, too, miss the days when you could write literally anything in XF86Config and that’s the signal it would send to your monitor. There was a warning in the docs that sending a signal your monitor couldn’t handle could easily fry it and cause physical damage, so please be careful.
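
For anyone who never had to write one by hand: a mode in XF86Config was a Modeline, which is basically a pixel clock plus horizontal and vertical timings, and the scan rates fall out of simple division. Rough sketch in Python, using the standard 640x480 at 60 Hz VGA timing as the example. The helper name `modeline_rates` is made up for illustration and isn’t part of XFree86, and it ignores flags like Interlace and DoubleScan.

```python
import shlex


def modeline_rates(modeline: str) -> tuple[float, float]:
    """Return (horizontal rate in kHz, vertical refresh in Hz) for a Modeline.

    Hypothetical helper for illustration only. It ignores trailing flags
    such as Interlace or DoubleScan, which would change the real refresh rate.
    """
    fields = shlex.split(modeline)  # handles the quoted mode name
    if fields and fields[0].lower() == "modeline":
        fields = fields[1:]
    # Remaining layout: name, clock (MHz), 4 horizontal values, 4 vertical values
    clock_mhz = float(fields[1])
    htotal = int(fields[5])  # total pixels per scanline, including blanking
    vtotal = int(fields[9])  # total lines per frame, including blanking
    hfreq_khz = clock_mhz * 1000.0 / htotal
    vrefresh_hz = hfreq_khz * 1000.0 / vtotal
    return hfreq_khz, vrefresh_hz


VGA_640X480 = 'Modeline "640x480" 25.175 640 656 752 800 480 490 492 525'
h_khz, v_hz = modeline_rates(VGA_640X480)
print(f"{h_khz:.2f} kHz horizontal, {v_hz:.2f} Hz vertical")
```

The horizontal number is the one to compare against the kHz range printed in the monitor’s manual before trying anything adventurous.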

Also, the good monitors came with an all-plastic screwdriver attached on the inside of the case, so that you would have one available that you couldn’t electrocute yourself with on the big capacitor since at that point you’d already revealed that you planned to open the thing up and start fuckin with it.

Those were wonderful days.

I would have thought the plastic screwdriver was more likely there so you could adjust variable inductors/capacitors with minimal interference? With a metal screwdriver you have to adjust, move it away, and check the result, since the presence of the screwdriver changes the result too.

Oh, it might be that. That actually makes more sense.

I just know that I always heard it was so you wouldn’t electrocute yourself, and that the first time I opened up a monitor for something and actually found one, I was beyond delighted.

Can’t wait to play a highly competitive game (Moon Buggy) at 700 Hz on my gaming rig (Amstrad)

Defender would also be ideal for this monitor.

This was normal back in the days of CS 1.5/1.6. People would play at 640x480 on a monitor that could handle 1280x960 because they could drive 640x480 at like 150+ Hz.
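
The arithmetic behind that, as a back-of-the-envelope sketch: the tube has a maximum horizontal scan rate (lines per second), so halving the number of lines roughly doubles the refresh rate you can reach. The 96 kHz rating and the 5% vertical blanking overhead below are assumptions picked for illustration, not the specs of any particular monitor, and `max_refresh_hz` is just a made-up helper name.

```python
def max_refresh_hz(h_scan_khz: float, visible_lines: int,
                   v_blank_fraction: float = 0.05) -> float:
    """Estimate the highest vertical refresh for a given horizontal scan limit.

    h_scan_khz       -- monitor's max horizontal scan rate (assumed value)
    visible_lines    -- vertical resolution, e.g. 480 for 640x480
    v_blank_fraction -- extra lines spent on vertical blanking (assumed ~5%)
    """
    total_lines = visible_lines * (1.0 + v_blank_fraction)
    return h_scan_khz * 1000.0 / total_lines


H_SCAN_KHZ = 96.0  # hypothetical rating for a decent late-90s 19" CRT

for width, height in [(1280, 960), (640, 480)]:
    hz = max_refresh_hz(H_SCAN_KHZ, height)
    print(f"{width}x{height}: up to about {hz:.0f} Hz")
```

With those assumed numbers you get roughly 95 Hz at 1280x960 and roughly 190 Hz at 640x480, which is why everyone dropped the resolution. In practice the monitor’s vertical refresh limit and the video card’s pixel clock cap it too.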

PC gamers be like “framerate dropped to 675, unplayable”

He should drop it down to a single pixel and see how fast it goes then.

It would be close to infinite refresh rate as the electron beams wouldn’t need to move. Also, the phosphors in that spot would probably burn away in a few minutes resulting in no refresh rate at all.

But for a glorious few moments you’d be in nerdvana. Worth it. Refresh rate will simply be calculated by how many electrons hit the screen per second, so not quite infinite. Bet the number is pretty high, though.

Reducing the resolution doesn’t make the beam move any less, even at 1px it would still scan and hit all the phosphors on the screen. My Viewsonic uses the entire screen regardless of resolution; if you set it to 1x1 the entire screen would be lit up. It’s not an LCD, there is no native resolution.

When you powered an old CRT down, the image would collapse to a single horizontal line, and then to a point.

I was thinking you could just cut the power to the deflecting coils.

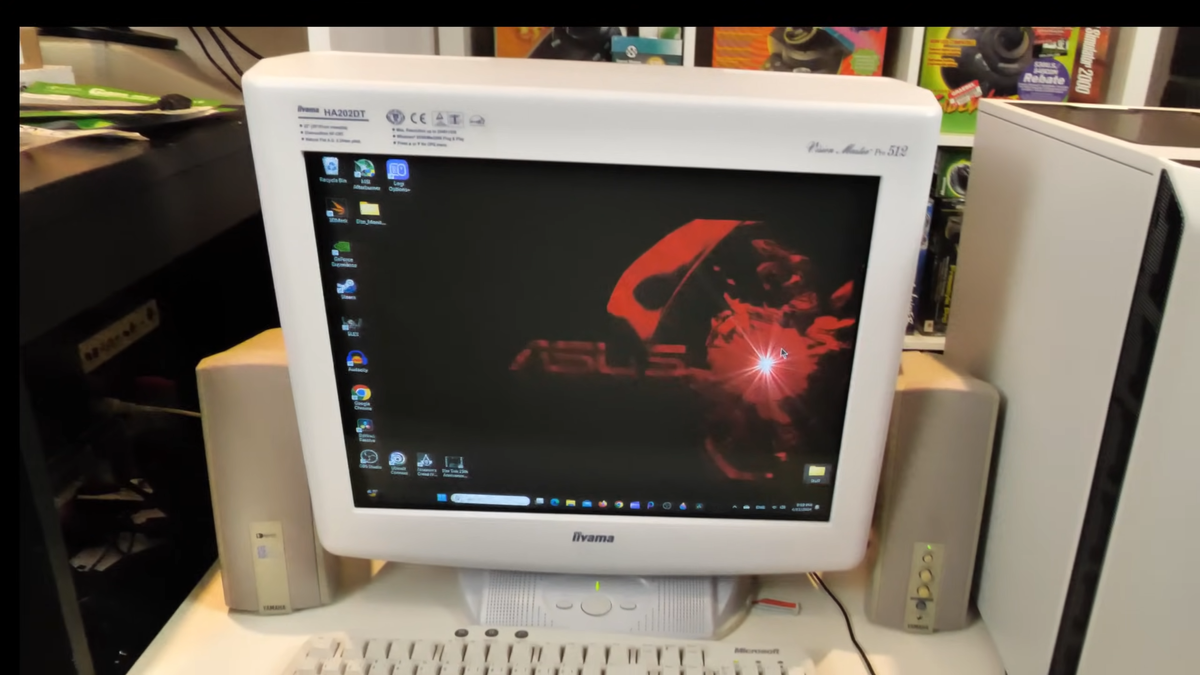

Iiyama monitors were the shit, especially if you were a designer

I still have two 19" in storage. I wonder if they are worth anything?

IPS matrix screens were kinda ok even in 2007, when I was selling them, but they had a super shitty response time, so everything had quite visible trails. That was the time when a good CRT was still a professional choice. But now, and for the last 10 years or so, IPS has become much better while keeping its great color reproduction, so there are zero reasons to look elsewhere; a standard midrange monitor does the job. And if you want even less trouble, just buy a designer a used iMac - that’s an almost complete workplace, and Apple’s Retina screens are also IPS. This is what several development (mobile, web) companies I worked at did.

Well, you can go fancy and buy new ones, but the used ones are in abundance - rich mac fanboys switch to every new model that comes out.

Well, I purchased them around 2001 so they’re retro/vintage!

I wish we got modern CRTs, I’d buy one in a heartbeat. It’s so hard to find a good one now that isn’t either shit or just battered to hell and back.

I used to heat my apartment with my dual monitor CRT setup

Can you imagine how smooth Heroes 3 must look on this?

I don’t know how to feel about this.

On the one hand, it’s cool that they pushed old electronics way beyond the known limits, but on the other hand is 120p really an accomplishment?

Even my old Commodore 64 from 1982 was able to produce around 400p when pushed to the limit (I know progressive wasn’t a thing on TVs then. I’m simplifying things to not end up on a side quest here). The norm was 200p, and exploring how far the electronics could go at that resolution would be far more interesting in my opinion.

If we’re just focusing on framerate, I’m pretty sure it would be possible to reach over the kHz limit with 1p.

Essentially it would be possible to run a 1p LED array at 1 MHz or more…

I miss my CRT, I really do.